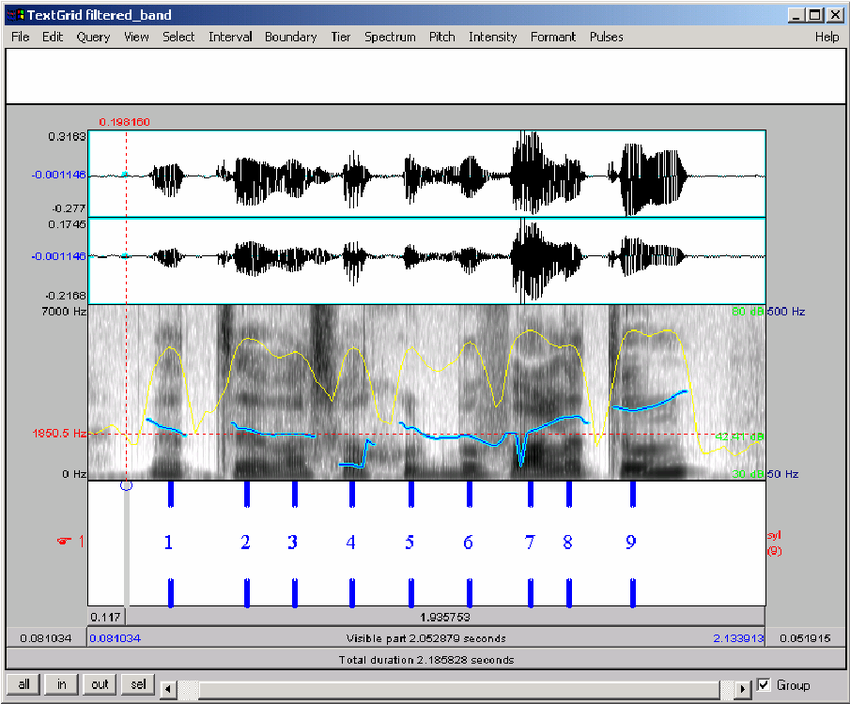

Solution: Secondary research that also informed my design decisions

To ground Pitch Noodle's development in theory and research, I borrowed pedagogical principles from the field of Applied Linguistics and usability principles from the field of Human-Computer Interaction (HCI).

Computer-Assisted Pronunciation Training (CAPT) Pedagogical Guidelines (Neri et al., 2002)

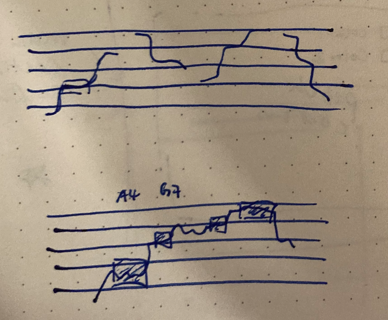

Defined as a learner’s access to diverse and accurate samples of the target language. New phonetic categories can only be formed from the exemplars a learner is exposed to, hence the need for authentic input.

Defined as a space for learners to produce speech in order to test their hypotheses about new phonetic sounds. Producing speech gives learners proprioceptive feedback on their own performance, which lets them make adjustments as necessary.

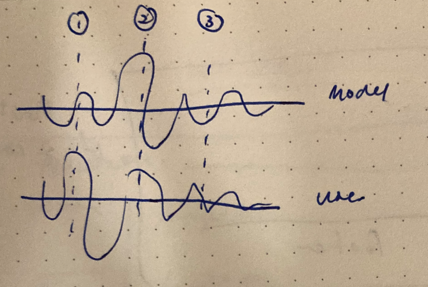

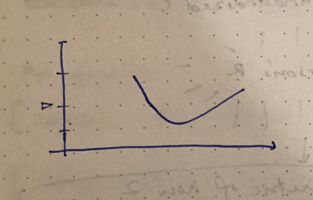

Defined as feedback that helps learners notice discrepancies between their productions and the target language. According to Schmidt’s (1990) “noticing hypothesis”, only through this awareness can a new sound be acquired.

Usability Design Guidelines (ISO 9241-11)

Defined as “the extent to which the intended goals of use of the overall systems are achieved.” In other words, how well do users achieve their goals using the system?

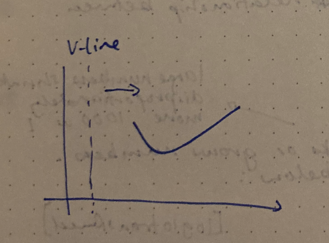

Defined as “the resources that have to be expended to achieve the intended goals”. In other words, what resources (e.g., time, costs, material resources) are consumed to complete a specific task, as measured by indicators such as task completion time?

Defined as “freedom from discomfort, and positive attitudes towards the use of the product.” In other words, how do users feel about their use of the system?