VR-AI Learning Simulation:

A VR environment driven by customized AI characters to teach graduate students effective research communication skills

UX Researcher · VR Developer · UX Designer · AI Designer

Overview

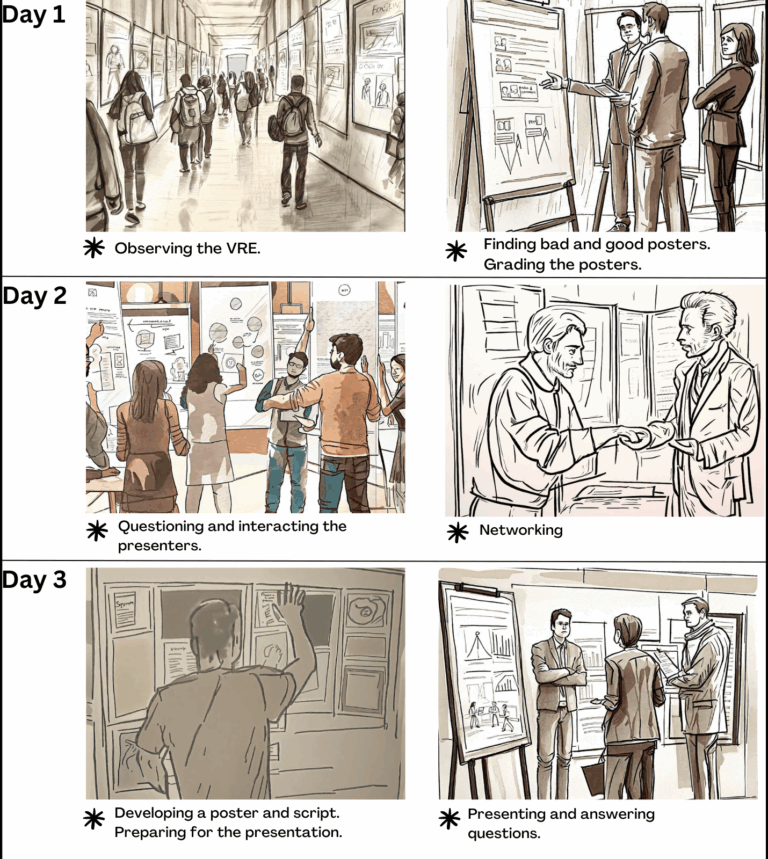

This VR-AI simulation drops learners into a fully immersive, three-day poster conference. Customized AI characters hold real-time conversations with learners as they complete tasks, so learners build communication skills through live dialogue. The simulation is currently used in graduate training and education at Iowa State University.

The Problem

Effective research communication skills are difficult to build because they develop primarily through socialization into a research community, and many graduate students lack those opportunities. How can we give all students equal and early access to them? Could emerging technologies help?

The Solution

We created a fully immersive VR simulation where customized AI characters act as members of a research community. Across three "conference days," learners explore the key goals and values that shape the "why" behind effective communication and practice it in real time.

The Process

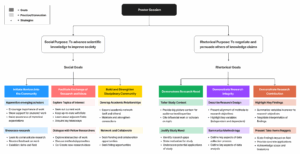

1️⃣ Defined 6 learning goals with SMEs

What is the “why” behind effective research communication? To understand this, we gathered design requirements from Subject Matter Experts (SMEs):

- Designed interview questions and interviewed 20 faculty members and graduate students about the values, purposes, and beliefs of their research community

- Analyzed 20+ hours of interview data using thematic analysis in MaxQDA

- Mentored 3 undergraduate researchers in data collection and interviewing

2️⃣ Developed interaction blueprints and task flows

To ensure that the simulation was educationally grounded, I triangulated interview insights (what to learn), educational theories (how it is learned), and technological affordances (how technology can support learning). Using these foundations, I created:

- VR design guidelines grounded in learning theory and SME input to ensure authentic learning contexts

- AI character training guidelines based on discourse analysis and authentic conversation samples to ensure realistic interactions

- Mockups and high-fidelity prototypes based on the design guidelines to validate key user flows and interactions

📈 Key output: The design documents served as blueprints for customizing the AI characters and developing the VR simulation. Published research to guide the field on simulation design; read the publication here.

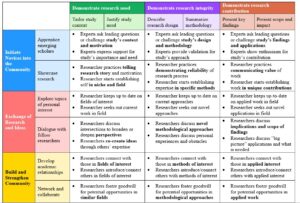

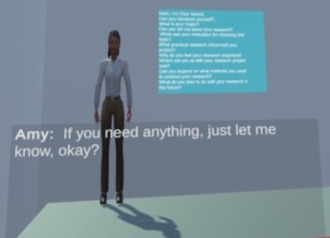

3️⃣ Customized and validated 10+ conversational AI characters

Our challenge was to train the AI characters to speak, act, and behave believably like members of the research community. Using the design guidelines, we:

- Created 10+ AI characters using Retrieval Augmented Generation (RAG) and prompt engineering techniques, aligning each character's knowledge, dialogue, and behavior with the guidelines

- Stress-tested the AI characters with adversarial queries, then documented recommendations and mitigations to strengthen compliance

- Used an A/B experimental design to compare the customized GPT model against the default GPT-4o model

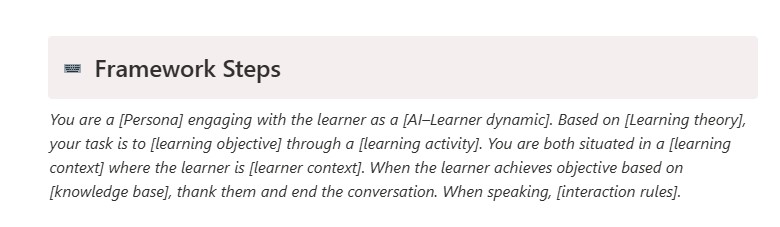

- Published a prompt framework for developing educational AI agents that educators can adapt to their own contexts
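To make the RAG-plus-prompt-engineering step above more concrete, here is a minimal sketch under stated assumptions. It assumes a character is defined by a role description plus a small bank of knowledge snippets, and it swaps in simple word-overlap scoring for the embedding search a production RAG pipeline would typically use. The names `Persona`, `retrieve`, and `build_system_prompt`, the character "Dr. Lee," and the snippets are all hypothetical; this is not the project's published framework.

```python
from dataclasses import dataclass, field
import re


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for real embeddings."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


@dataclass
class Persona:
    """Hypothetical AI character: a role plus a knowledge bank to retrieve from."""
    name: str
    role: str
    knowledge: list[str] = field(default_factory=list)


def retrieve(persona: Persona, query: str, k: int = 2) -> list[str]:
    """Return up to k snippets that share at least one word with the query."""
    q = tokenize(query)
    scored = [(len(q & tokenize(s)), s) for s in persona.knowledge]
    # Highest overlap first (stable sort keeps original order on ties).
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [s for score, s in scored[:k] if score > 0]


def build_system_prompt(persona: Persona, query: str) -> str:
    """Combine in-character instructions with retrieved context."""
    context = retrieve(persona, query)
    lines = [
        f"You are {persona.name}, {persona.role}.",
        "Stay in character as a member of the research community.",
        "Base factual claims only on the context below; say so if it is not covered.",
        "Context:",
    ]
    # Fall back to an explicit marker when nothing relevant was retrieved.
    lines += [f"- {snippet}" for snippet in context] or ["- (no relevant context)"]
    return "\n".join(lines)


# Hypothetical character for illustration only.
reviewer = Persona(
    name="Dr. Lee",
    role="a faculty member visiting the poster session",
    knowledge=[
        "Posters should state the research question in one sentence.",
        "Reviewers value clear methods and honest limitations.",
        "Lunch is served in the main hall.",
    ],
)
print(build_system_prompt(reviewer, "How should I state my research question?"))
```

Keeping retrieval separate from prompt assembly means the knowledge bank can be swapped for a vector store without changing the character instructions.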

📈 Key output: All AI characters met key pedagogical requirements. The customized model also outperformed the default GPT-4o model on key benchmarks of helpfulness, honesty, and harmlessness. Publication forthcoming.

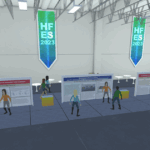

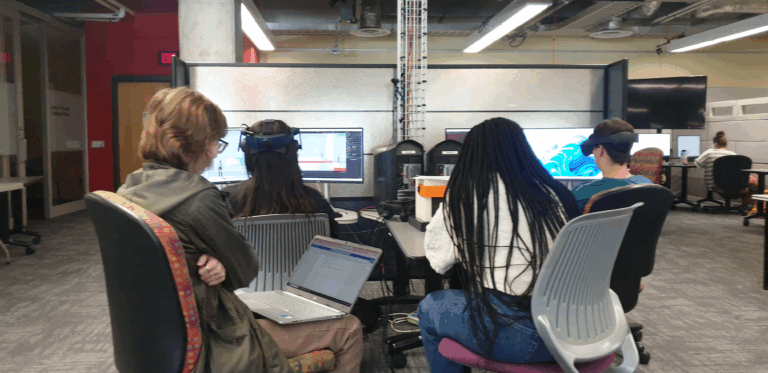

4️⃣ Built early VR prototypes for immersive user testing

The next challenge was to create an authentic learning context in a VR environment. Using the design guidelines, I:

- Led a group of 3 undergraduate researchers through ideation, VR development and iteration, and data collection and analysis

- Conceptualized and developed 6 learning features and tasks in Unity3D and deployed them on the Quest 3

- Evaluated early prototypes on usability (text readability, navigation) and experience design (speech bubbles, interaction dynamics), driving iterative improvements and refining the learning experience

📈 Key output: Finalized the simulation’s concept, storyline, learning tasks, and interaction design, including dialogue, user flow, and navigation mechanics. These elements became the foundation for subsequent development.

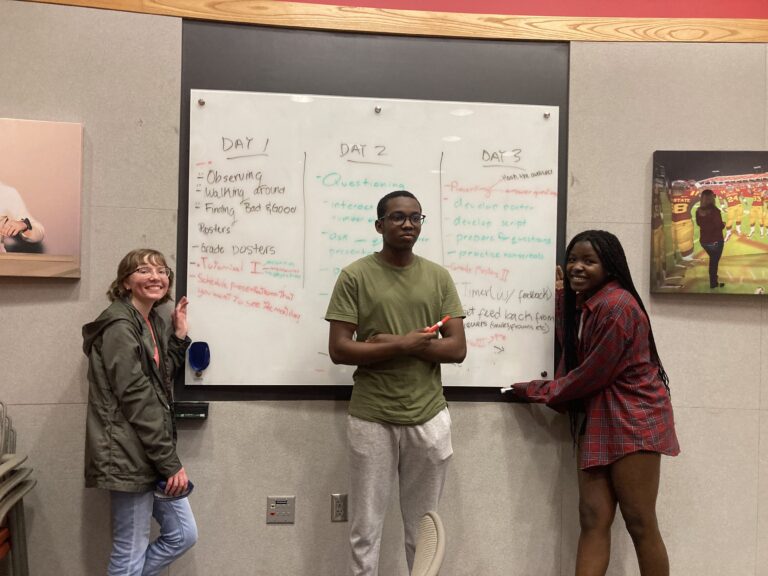

5️⃣ Refined prototypes into full 3-day simulation through iterative cycles

To ensure pedagogical viability of the simulation, I led a fidelity and usability study with 20 faculty and graduate students:

- Defined key fidelity dimensions (physical, functional, psychological, and social fidelity) and established benchmarks for evaluation

- Designed and implemented validated surveys to assess fidelity, alongside structured observations and interviews for deeper insights

- Analyzed data using qualitative methods (thematic analysis, codebook) and quantitative methods (t-tests, ANOVAs) to evaluate usability and pedagogical viability

- Mentored a junior researcher throughout the process, guiding VR iterations, data collection, and analysis

📈 Key output: Findings showed that the simulation approximated the real experience, with high overall fidelity and usability. However, social fidelity was low: users reported that some interactions felt unnatural, which decreased their trust and sense of presence. Research insights led to:

- 2 new features (to reduce cognitive load) and 3 design changes (to enhance social fidelity)

- Full development of the VR-AI simulation

- Contribution to evidence-based design for educational VR (publication forthcoming)

Conducting usability sessions

Testing feature iterations

Programming mishap! Sometimes our code doesn't quite go as planned!

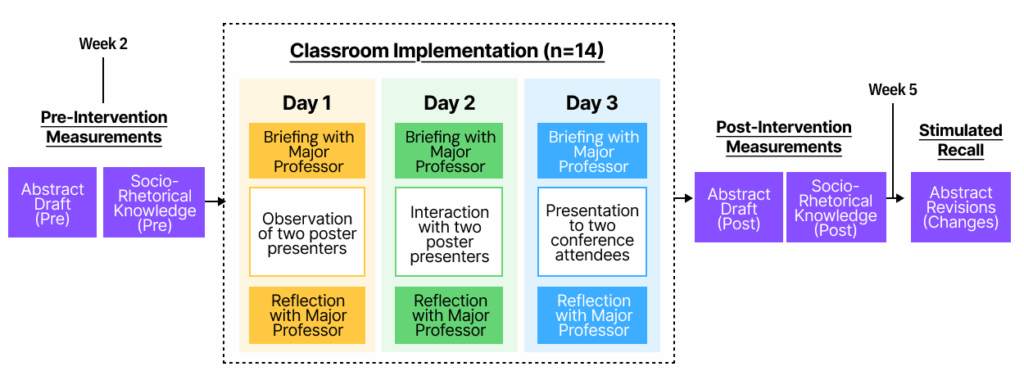

6️⃣ Deployed simulation and evaluated learning outcomes

The simulation was deployed in a graduate research writing course with 15 students. The goal was to evaluate whether the experience helped learners internalize the "why" behind effective communication and transfer those concepts into their writing:

- Evaluated learning outcomes using interviews, questionnaires, and stimulated recall; analyzed responses using comparative analysis and t-tests

- Assessed writing transfer through pre/post writing samples using textual analysis, holistic ratings, and t-tests
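Because each student contributed both a pre and a post writing sample, the t-tests above compare matched pairs rather than two independent groups. As a hedged illustration only, the sketch below computes a paired t statistic with the Python standard library; the function name `paired_t` and the ratings are made up and are not study data. A library routine such as SciPy's `scipy.stats.ttest_rel` would also report the p-value.

```python
import math
from statistics import mean, stdev


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Paired t statistic for pre/post scores from the same students.

    Returns (t, degrees_of_freedom); t > 0 means post scores are higher on average.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length lists with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]  # per-student change
    sd = stdev(diffs)  # sample standard deviation (n - 1 denominator)
    if sd == 0:
        raise ValueError("all differences are identical; t is undefined")
    t = mean(diffs) / (sd / math.sqrt(len(diffs)))
    return t, len(diffs) - 1


# Hypothetical holistic ratings for five students, NOT study data.
pre_scores = [3.0, 2.5, 4.0, 3.5, 3.0]
post_scores = [3.5, 3.5, 4.0, 4.5, 3.5]
t, df = paired_t(pre_scores, post_scores)
print(f"t({df}) = {t:.2f}")
```

Working on per-student differences removes between-student variation in baseline writing ability, which is why the paired design suits pre/post samples.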

The Outcome

This work demonstrated that AI conversations embedded in a VR environment can effectively simulate real social interactions, making the approach well suited to situated learning. Its success led to:

- Faster skill acquisition: Accelerated graduate students’ research-writing development through authentic activities and feedback loops

- Reusable GPT framework: Codified a framework for customizing educational GPTs so instructors can tailor domain-specific requirements for their own contexts

- VR design guidelines: Authored practical guidelines (layout, fidelity thresholds, interaction patterns) for VR environments that support situated and educational tasks

- Evaluative guidelines: Created validity and outcome criteria to evaluate immersive, interactive learning tools for classroom deployment

- Course adoption: Partnered with faculty; VR-AI simulation now embedded in graduate courses

- Institutional impact: Informed leadership strategy for VR/GenAI initiatives in graduate education and training